29.03.2015 FLOBOT

Washing Robot for Professional Users

Supermarkets and other industrial, commercial, civil and service premises such as airports, trade fairs, hospitals and sports courts have huge floor surfaces that must be cleaned daily or several times a day. This cleaning is time-consuming, repetitive manual work. It takes place at various times, often on a tight schedule that depends on the kind of premises, the available time slots and the organisational constraints of the tenant; often the personnel have to remain on standby at the premises to be cleaned. Additionally, cleaning services often face problems related to workers’ health and ergonomics. The economic viability of cleaning companies commonly relies on low wages and low-skilled personnel. Floor washing is therefore well suited to robotisation.

Currently, no robot exists that satisfies the requirements of professional users and cleaning service companies in terms of both cleaning performance and cost. Robotised floor washing is demanding in many respects: autonomy of operation, precision of navigation, safety with regard to humans and goods, interaction with the personnel, and easy set-up of paths and task sequences without complex reprogramming.

FLOBOT addresses these problems by integrating existing research results into a professional floor washing robot platform for wide-area industrial, civil and commercial premises. The result will be a TRL-8 prototype, tested in a real operating environment. The project derives requirements from professional users and implements case validations in four kinds of premises.

The FLOBOT will be a professional robot scrubber with suction to dry the floor. The system consists of a mobile platform (robot) and of a docking station. It includes software modules for human tracking for safety, floor cleaning intelligence, navigation and mapping, mission programming and connection to the data management ERP of the users.

Funding

- Horizon 2020 Programme (H2020-ICT-2014-1, Grant agreement no: 645376).

14.07.2014 Crazy Robots

Crazy Robots is a science communication project of the Vision for Robotics Group, funded by FWF Wissenschaftsfonds. The project aims to teach school students robotics from the product development perspective and addresses all students (not only the ones interested in Science, Technology, Engineering, and Mathematics – STEM). A specific feature of this project is the user-centered design approach, which focuses on the interests of students and teachers and involves other stakeholders such as parents.

The concept is based on the “5-step plan” and the “project assignment with Mattie robot”. Both concepts and the robot were developed for children. The researchers follow two main goals:

- The project introduces students, teachers (and interested parents) to robotics by offering different perspectives, e.g. not only the technical, but also social and economic perspectives. Furthermore, students are encouraged to find ways to apply their interests and talents in robotics fields.

- Along the way, the project evaluates the effectiveness of the concept and, where applicable, how well the ideas transfer to real products or services in robotics fields.

The project started in March 2014. After a phase of planning and development, pilot schools were contacted. The implementation has been under way since October 2014. Five high schools from Vienna take part in the project, each with a second- or third-year class (and their handicrafts or physics teachers).

In detail, each class learns about the product development process of robots in three workshops during one semester. Each of these workshops represents a key phase of product development.

It starts with ideation in the first workshop “concept”, where students develop their first robot ideas with the help of the 5-step plan – a simple yet effective structure for their ideas – which they convert into a clay model.

For the second workshop “experts”, the class visits the Vision for Robotics Lab and meets robotics experts who talk about their work – computer vision – during a demo. After this, the students receive a project assignment from the CEO of Crazy Robots Inc. to build a first prototype of a robot for kids. Each student can decide to be in one of the following teams: engineering, human-robot interaction, research & development, design or sales & marketing.

In the third workshop “evaluation”, the different teams reunite and integrate the robot parts. Then, the prototype is evaluated from two perspectives: technical and user. Finally, the product idea is presented to the CEO and the prototype is demonstrated. Between workshops, teachers have the possibility to discuss lessons learned in class.

08.06.2014 SQUIRREL

Clearing Clutter Bit by Bit

Clutter in an open world is a challenge for many aspects of robotic systems, especially for autonomous robots deployed in unstructured domestic settings, affecting navigation, manipulation, vision, human-robot interaction and planning.

Squirrel addresses these issues by actively controlling clutter and incrementally learning to extend the robot’s capabilities while doing so. We term this the B3 (bit by bit) approach, as the robot tackles clutter one bit at a time and also extends its knowledge continuously as new bits of information become available. Squirrel is inspired by a user-driven scenario that exhibits all the rich complexity required to convincingly drive research, but allows tractable solutions with high potential for exploitation. We propose a toy cleaning scenario, where a robot learns to collect toys scattered in loose clumps or tangled heaps on the floor in a child’s room, and to stow them in designated target locations.

We will advance science w.r.t. manipulation, where we will incrementally learn grasp affordances with a dexterous hand; segmenting and learning objects and object category models from a cluttered scene; localisation and navigation in a crowded and changing scene based on incrementally built 3D environment models; iterative task planning in an open world; and engaging with multiple users in a dynamic collaborative task.

Progress will be measured in scenarios of increasing complexity, starting with known object classes, via incremental learning of objects and grasp affordances, to the full system with failure recovery and active control of clutter, instantiated on two different robot platforms. Systems will be evaluated in nurseries and day cares, where children playfully engage with the robot to teach it how to clean up.

Partners

- Technische Universität Wien, Institut für Automatisierungs- und Regelungstechnik

- Albert-Ludwigs-Universität Freiburg

- Universität Innsbruck

- King’s College London

- University of Twente

- Fraunhofer IPA

- Festo Didactic

- IDMind

- Verein Pädagogische Initiative 2-10

Funding

FP7 No. 610532.

08.06.2014 Argonauts

ARGOS Challenge by Total

In December 2013, Total launched the ARGOS Challenge (Autonomous Robot for Gas and Oil Sites), an international robotics competition designed to foster the development of a new generation of autonomous robots adapted to oil and gas sites. These robots will be capable of performing inspection tasks, detecting anomalies and intervening in emergency situations.

In June 2014, five teams from Austria, Spain, France, Japan and Switzerland were selected to take part in the ARGOS Challenge. They have less than three years to design and build the first autonomous surface robot complying with ATEX/IECEx standards. The robot will be able to operate in specific environments encountered in the oil and gas industry.

By launching this challenge in partnership with the French National Research Agency (ANR), Total has adopted an open innovation strategy. The aim was to involve associations in robotics, partly to make them more aware of the operating constraints encountered in oil and gas production activities, and partly to encourage them to suggest innovative robotics solutions, providing answers to the problems we encounter, and increasing safety for our personnel.

Partners

Five teams take part: Argonauts, Foxiris, Vikings, Air-K and Lio. They come from Austria, Spain, France, Japan and Switzerland.

Funding

Total Challenge operated by ANR (Total) and EuroSTAR

14.07.2013 FRANC

Field Robot for Advanced Navigation in Bio Crops

While modern agriculture uses increasingly powerful and complex machines with advanced technology, organic farming is characterized by many manual tasks. The FRANC project develops and builds an autonomous vehicle intended especially for organic farming. The vehicle is equipped with the sensor technology and the control hardware and software needed to drive autonomously in row crops. Steerable front and rear wheels make a tight turning radius possible. A modular design allows easy and flexible adaptation to different applications and requirements. A safety concept is developed to ensure that the vehicle can be stopped immediately and brought into a safe operating mode. For the safety system, it is assumed that the vehicle does not operate unattended and that a remote control allows intervention in the automatic system at any time.

Within this project, the school is introduced to an advanced technology in agricultural engineering. The primary intention is to arouse students’ interest in robotics. The use of modern technologies (sensors, navigation, control engineering, …) can be taught clearly when grounded in a real application. The trend in agricultural farming is towards diagnosis and treatment of individual plants. Thus a large number of research questions lie far beyond the project’s goals. This project builds the basis for a future, long-term collaboration.

Partners

- Technische Universität Wien, Institut für Automatisierungs- und Regelungstechnik

- Josephinum Research

- Hochschule Osnabrück, Fakultät Ingenieurwissenschaften und Informatik

- Bio Lutz GmbH

- BLT Wieselburg

- Höhere Bundeslehr- und Forschungsanstalt Francisco Josephinum

- Höhere Technische Bundeslehr- und Versuchsanstalt Waidhofen an der Ybbs

Funding

FRANC is funded by Sparkling Science, a programme of the Federal Ministry of Science and Research of Austria.

09.06.2013 STRANDS

Spatio-Temporal Representations and Activities for Cognitive Control in Long-Term Scenarios

STRANDS aims to enable a robot to achieve robust and intelligent behaviour in human environments through adaptation to, and the exploitation of, long-term experience. Our approach is based on understanding 3D space and how it changes over time, from milliseconds to months.

We will develop novel approaches to extract quantitative and qualitative spatio-temporal structure from sensor data gathered during months of autonomous operation. Extracted structure will include reoccurring geometric primitives, objects, people, and models of activity. We will also develop control mechanisms which exploit these structures to yield adaptive behaviour in highly demanding, real-world security and care scenarios.

The spatio-temporal dynamics presented by such scenarios (e.g. humans moving, furniture changing position, objects (re-)appearing) are largely treated as anomalous readings by state-of-the-art robots. Errors introduced by these readings accumulate over the lifetime of such systems, preventing many of them from running for more than a few hours. By autonomously modelling spatio-temporal dynamics, our robots will be able to run for significantly longer than current systems (at least 120 days by the end of the project). Long runtimes provide previously unattainable opportunities for a robot to learn about its world. Our systems will take these opportunities, advancing long-term mapping, life-long learning about objects, person tracking, human activity recognition and self-motivated behaviour generation.

We will integrate our advances into complete cognitive systems to be deployed and evaluated at two end-user sites. The tasks these systems will perform are impossible without long-term adaptation to spatio-temporal dynamics, yet they are tasks demanded by early adopters of cognitive robots. We will measure our progress by benchmarking these systems against detailed user requirements and a range of objective criteria including measures of system runtime and autonomous behaviour.

Partners

- Technische Universität Wien, Institut für Automatisierungs- und Regelungstechnik

- University of Birmingham

- Akademie für Altersforschung am Haus der Barmherzigkeit

- G4S Technology Ltd

- Kungliga Tekniska Högskolan

- Rheinisch-Westfälische Technische Hochschule Aachen

- University of Leeds

- University of Lincoln

Funding

FP7 no. 600623

01.01.2013 V4HRC

Human-Robot Cooperation: Perspective Sharing

Imagine a human and a robot performing a joint assembly task such as assembling a shelf. This task clearly requires a number of sequential or parallel actions and visual information about the context to be negotiated and performed in coordination by the partners.

However, human vision and machine vision differ. On one hand, computer vision systems are precise, achieve repeatable results, and are able to perceive wavelengths invisible to humans. On the other hand, sense-making of a picture or scenery can be considered a typical human trait. So how should the cooperation in a vision-based task (e.g. building something together out of Lego bricks) work for a human-robot team, if they do not have a common perception of the world?

There is a need for grounding in human-robot cooperation. In order to achieve this we have to combine the strengths of human beings (e.g. problem solving, sense making, and the ability to make decisions) with the strengths of robotics (e.g. omnivisual cameras, consistency of vision measures, and storage of vision data).

Therefore, the aim is to explore how human dyads cooperate in vision-based tasks and how they achieve grounding. The findings from human dyads will then be transferred in an adapted manner to human-robot interaction in order to inform the behavior implementation of the robot. Human and machine vision will be bridged by letting the human “see through the robot’s eyes” at identified moments, which could increase the collaboration performance.

User studies using the Wizard-of-Oz technique (the robot is not acting autonomously, but is remote-controlled by a “wizard” behind the scenes) will be conducted and assessed in terms of user satisfaction. The results of human-human dyads and human-robot teams will be compared regarding performance and quality criteria (usability, user experience, and social acceptance), in order to gain an understanding of what makes human-robot cooperation satisfying for the user. As there is evidence in Human-Robot Interaction research that the cultural background of the participants and the embodiment of the robot can influence the perception and performance of human-robot collaboration, comparison studies in the USA and Japan will be conducted in the final stage of the research to explore whether this holds true for vision-based cooperation tasks.

With this approach, it can be systematically explored how grounding in human-robot vision can be achieved. As such, the research proposed in this project is vital for future robotic vision projects where robots are expected to share an environment with humans and perform tasks jointly with them. The project follows a highly interdisciplinary approach and brings together research aspects from sociology, computer science, cognitive science, and robotics.

14.07.2011 HOBBIT

The Mutual Care Robot

Ageing has been prioritised as a key demographic element affecting the population development within the EU member states. Experts and users agree that Ambient Assisted Living (AAL) and Social and Service Robots (SSR) have the potential to become key components in coping with Europe’s demographic changes in the coming years. From all past experiences with service robots, it is evident that acceptance, usability and affordability will be the prime factors for any successful introduction of such technology into the homes of older people.

While world players in home care robotics tend to follow a pragmatic approach such as single function systems (USA) or humanoid robots (Japan, Korea), we introduce a new, more user-centred concept called “Mutual Care”: By providing a possibility for the Human to “take care” of the robot like a partner, real feelings and affections toward it will be created. It is easier to accept assistance from a robot when in certain situations, the Human can also assist the machine. In turn, older users will more readily accept the help of the HOBBIT robot. Close cooperation with institutional caregivers will enable the consortium to continuously improve acceptance and usability.

In contrast to current approaches, HOBBIT, the mutual care robot, will offer practical and tangible benefits for the user with a price tag starting below EUR 14,000. This offers a realistic chance for HOBBIT to refinance itself in about 18-24 months (in comparison with nursing institutions or 24hr home care). In addition, HOBBIT has the potential to delay institutionalisation of older persons by at least two years, which will result in a general strengthening of the competitiveness of the European economy. We will ensure that the concept of HOBBIT seeds a new robotic industry segment for aging well in the European Union. The use of standardised industrial components and the participation of two leading industrial partners will ensure the exploitation of HOBBIT.

26.07.2008 Meta Mechanics

The metamechanics RoboCup@Home team was established in late 2008 at the TU Wien. It is a mixed team of the Faculty of Electrical Engineering and Information Technology and the Department of Computer Science. In 2009, metamechanics plans to participate for the first time in the World Cup in Graz and in the German Open 2009.

The team consists of a mixture of Bachelor, Master and PhD students, who are advised by professors from the university.

14.07.2008 GRASP

Emergence of Cognitive Grasping through Emulation, Introspection, and Surprise

The aim of GRASP is the design of a cognitive system capable of performing tasks in open-ended environments, dealing with uncertainty and novel situations. The design of such a system must take into account three important requirements: i) it has to rest on a solid theoretical basis, ii) it has to be extensively evaluated on a suitable and measurable basis, thus iii) allowing for self-understanding and self-extension.

We have decided to study the problem of object manipulation and grasping by providing a theoretical and measurable basis for system design that is valid in both human and artificial systems. We believe that this is of utmost importance for the design of artificial cognitive systems that are to be deployed in real environments and interact with humans and other artificial agents. Such systems need the ability to exploit innate knowledge and self-understanding to gradually develop cognitive capabilities. To demonstrate the feasibility of our approach, we will instantiate, implement and evaluate our theories on robot systems with different embodiments and levels of complexity. These systems will operate in real-world scenarios, with and without human intervention and tutoring.

GRASP will develop means for robotic systems to reason about graspable targets, to explore and investigate their physical properties and finally to make artificial hands grasp any object. We will use theoretical, computational and experimental studies to model skilled sensorimotor behavior based on known principles governing grasping and manipulation tasks performed by humans. Therefore, GRASP sets out to integrate a large body of findings from disciplines such as neuroscience, cognitive science, robotics, multi-modal perception and machine learning to achieve a core capability: Grasping any object by building up relations between task setting, embodied hand actions, object attributes, and contextual knowledge.

Goals

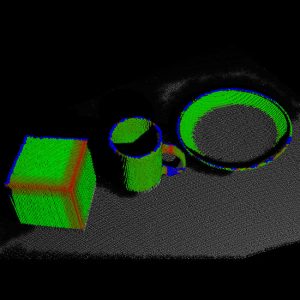

Develop computer vision methods to detect grasping points on arbitrary objects so that any object can be grasped. At the end of the project we want to show that a basket filled with everyday objects can be emptied by the robot, even if it has never seen some of the objects before. Hence it is necessary to develop the vision methods and to link percepts to motor commands via an ontology that represents the grasping knowledge, relating object properties such as shape, size, and orientation to hand grasp types and posture and to the task.

Our (TUW) tasks/goals in this project are:

- [Task1] – Acquiring (perceiving, formalising) knowledge through hand-environment interaction:

The objective is to combine expectations from previous grasping experiences with the actual percepts of the present grasping action. Hence we investigate a plethora of cues and features to be able to extract the set of relevant cues related to the grasping task. We will study edge structure features and grouping to objects, surface reconstruction and tracking, figure/ground segmentation, shape from edge and surfaces, recognition/classification of objects, spatio-temporal and pose relations hand-object, multimodal grounding and uncertainties of geometric attributes leading to low level surprise detection and the integration or synthesis in the ontology including the combination with prediction (TUM, TUW).

- [Task2] – Perceiving task relations and affordances: The objective is to exploit the set of features extracted in Task 4.1 to obtain a vocabulary of features relevant to the grasping of objects and to learn the feature relations to the potential grasping behaviours and types. These relations will form part of the grasping ontology. Furthermore, the goal is to obtain a hierarchical structure or abstraction of features, such that new objects can be related to this hierarchy. The approach sets out to obtain an asymptotic behaviour for new objects, such that early on extensions are frequently necessary, while over the course of learning it becomes known how to grasp more and more objects. Finally, the features will be used to propose potential actions (affordances) and to invoke the grasping cycle.

- [Task3] – Linking structure, affordance, action and task: The objective is to provide the necessary input to the grasping ontology, which holds in a relational graph or database the grasping experiences learned. It contains an abstraction formed over specific behaviours and sub-parts of reaching and grasping actions. It models relations and constraints to (1) the object and its properties such as size, shape and weight, to (2) perceived affordances (potentialities for actions) and grasping points, to (3) the task that is executed, e.g., grasping for pick-up or to move a cup, and to (4) the context or surrounding of relevance, e.g., obstacles to circumnavigate or surfaces to place the object. It will be investigated how such a link can be efficiently established, how the plasticity of the link can be achieved to enable learning and multiple cross-references, and how this can form a hierarchy of behaviours and links to efficiently represent different grasp types/relations exploiting the vocabulary to achieve extendibility to grasp new objects.
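As a rough illustration of the relational store described in Task 3, the following minimal Python sketch indexes each grasping experience under the four relation kinds named above (object property, affordance, task, context) and looks up grasp types by intersecting those relations. It is not the project's ontology: the class names `GraspExperience` and `GraspOntology`, all field names, and the example values are hypothetical and chosen only for this sketch.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class GraspExperience:
    """One learned grasping experience (all field names are illustrative)."""
    object_shape: str   # (1) object property, e.g. "cylinder"
    affordance: str     # (2) perceived affordance, e.g. "graspable-handle"
    task: str           # (3) executed task, e.g. "pick-up"
    context: str        # (4) relevant surrounding, e.g. "on-table"
    grasp_type: str     # hand grasp type that was used, e.g. "power"


@dataclass
class GraspOntology:
    """Toy relational store: every experience is indexed by each relation."""
    experiences: list = field(default_factory=list)
    index: dict = field(default_factory=dict)  # (relation, value) -> set of ids

    RELATIONS = ("object_shape", "affordance", "task", "context")

    def add(self, exp):
        """Store an experience and cross-reference it under all relations."""
        exp_id = len(self.experiences)
        self.experiences.append(exp)
        for relation in self.RELATIONS:
            key = (relation, getattr(exp, relation))
            self.index.setdefault(key, set()).add(exp_id)
        return exp_id

    def propose_grasps(self, **query):
        """Return grasp types of experiences that match every given relation."""
        ids = None
        for relation, value in query.items():
            matches = self.index.get((relation, value), set())
            ids = matches if ids is None else ids & matches
        return sorted({self.experiences[i].grasp_type for i in (ids or set())})


# Hypothetical example data, not taken from the project.
ontology = GraspOntology()
ontology.add(GraspExperience("cylinder", "graspable-body", "pick-up", "on-table", "power"))
ontology.add(GraspExperience("cylinder", "graspable-handle", "move", "on-table", "precision"))
print(ontology.propose_grasps(object_shape="cylinder", task="pick-up"))  # ['power']
```

Indexing every experience under several relations is one simple way to get the multiple cross-references mentioned in the task description; a hierarchy of behaviours would need additional structure that this sketch leaves out.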

Partners

- Kungliga Tekniska Högskolan, Stockholm, Sweden

- Universität Karlsruhe, Karlsruhe, Germany

- Technische Universität München, Munich, Germany

- Lappeenranta University of Technology, Lappeenranta, Finland

- Foundation for Research and Technology – Hellas, Greece

- Universitat Jaume I, Castellón, Spain

- Otto Bock GmbH, Austria