CogX

Cognitive Systems that Self-Understand and Self-Extend

A specific, if very simple, example of the kind of task that we will tackle is a domestic robot assistant or gofer that is asked by a human: “Please bring me the box of cornflakes.” There are many kinds of knowledge gaps that could be present (we will not tackle all of these):

- What this particular box looks like.

- Which room this particular item is currently in.

- What cereal boxes look like in general.

- Where cereal boxes are typically to be found within a house.

- How to grasp this particular packet.

- How to grasp cereal packets in general.

- What the cornflakes box is to be used for by the human.

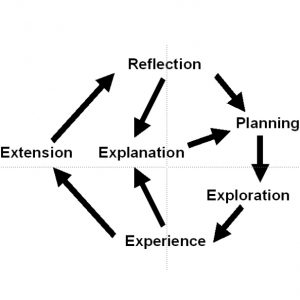

The robot will have to fill the knowledge gaps necessary to complete the task, but this also offers opportunities for learning. To self-extend, the robot must identify and exploit these opportunities. We will allow this learning to be curiosity-driven. Within the confines of our scenario, this lets us study mechanisms able to generate a spectrum of behaviours, from purely task-driven information gathering to purely curiosity-driven learning. To be flexible, the robot must be able to do both. It must also know how to trade off efficient execution of the current task – find out where the box is and get it – against curiosity-driven learning of what might be useful in future – find out where you can usually find cereal boxes, or spend time, once the box is found, performing grasps and pushes on it to see how it behaves. One extreme of the spectrum we can characterise as a task-focused robot assistant, the other as a kind of curious robotic child scientist that tarries while performing its assigned task in order to make discoveries and experiments. One of our objectives is to show how to embed both these characteristics in the same system, and how architectural mechanisms can allow an operator – or perhaps a higher-order system in the robot – to alter their relative priority, and thus the behaviour of the robot.
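The adjustable priority between task execution and curiosity can be illustrated with a minimal sketch. It is not the project's architecture: the names `CandidateAction`, `task_gain`, `learning_gain`, `select_action` and `curiosity_weight` are hypothetical, the gain values are invented for illustration, and a simple linear blend is assumed where a real system would likely use something richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateAction:
    name: str
    task_gain: float      # assumed estimate of progress on the current task, in [0, 1]
    learning_gain: float  # assumed estimate of expected knowledge gain, in [0, 1]


def select_action(actions, curiosity_weight):
    """Return the action with the highest blended score.

    curiosity_weight = 0.0 models a purely task-driven assistant,
    curiosity_weight = 1.0 a purely curiosity-driven learner.
    """
    if not 0.0 <= curiosity_weight <= 1.0:
        raise ValueError("curiosity_weight must lie in [0, 1]")
    if not actions:
        raise ValueError("no candidate actions")

    def score(action):
        # Linear blend of the two gains (an assumption of this sketch).
        return ((1.0 - curiosity_weight) * action.task_gain
                + curiosity_weight * action.learning_gain)

    return max(actions, key=score)


# Illustrative values only; they are not measurements.
actions = [
    CandidateAction("fetch_box", task_gain=0.9, learning_gain=0.1),
    CandidateAction("push_box_to_test_weight", task_gain=0.1, learning_gain=0.8),
    CandidateAction("survey_kitchen_shelves", task_gain=0.4, learning_gain=0.5),
]
```

In this sketch, an operator or a higher-order process would change only `curiosity_weight`; the candidate actions and their estimated gains stay the same, while the selected behaviour shifts along the spectrum.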

Goals

The ability to manipulate novel objects detected in the environment and to predict their behaviour after a certain action is applied to them is important for a robot that can extend its own abilities. The goal is to provide the necessary sensory input for the above by exploiting the interplay between perception and manipulation. We will develop robust, generalisable and extensible manipulation strategies based on visual and haptic input. We envisage two forms of object manipulation: pushing using a “finger” containing a force-torque sensor and grasping using a parallel jaw gripper and a three-finger hand. Through coupling of perception and action we will thus be able to extract additional information about objects, e.g. weight, and reason about object properties such as empty or full.

[Task1] – Contour based shape representations. Investigate methods to robustly extract object contours using edge-based perceptual grouping methods. Develop representations of 3D shape based on contours of different views of the object, as seen from different camera positions or obtained by the robot holding and turning the object actively. Investigate how to incorporate learned perceptual primitives and spatial relations. (M1 – M12)

[Task2] – Early grasping strategies. Based on the visual sensory input extracted in Task 1, define motor representations of grasping actions for two- and three-fingered hands. The initial grasping strategies will be defined by a suitable approach vector (relative pose with respect to the object or grasping part) and preshape strategy (grasp type). (M7 – M18)

[Task3] – Active segmentation. Use haptic information together with pushing and grasping actions i) for interactive scene segmentation into meaningful objects, and ii) for extracting a more detailed model of the object (visual and haptic). Furthermore, use information inside regions (surface markings, texture, shading) to complement contour information and build denser and more accurate models. (M13 – M36)

[Task4] – Active Visual Search. Survey the literature and evaluate different methods for visual object search in realistic environments with a mobile robot. Based on this survey, develop a system that can detect and recognise objects in a natural (possibly simplified) environment. (M1 – M24)

[Task5] – Object based spatial modeling. Investigate how to include objects into the spatial representation such that properties of the available vision systems are captured and taken into account. The purpose of this task is to develop a framework that allows for a hybrid representation where objects and traditional metric spatial models can coexist. (M7 – M24)

[Task6] – Functional understanding of space. Investigate the functional understanding of space by analyzing spatial models over time. (M24 – M48)

[Task7] – Grasping novel objects. Based on the object models acquired, we will investigate the scalability of the system with respect to grasping novel, previously unseen objects. We will demonstrate how the system can execute tasks that involve grasping based on the extracted sensory input (both about scene and individual objects) and taking into account its embodiment. (M25 – M48)

[Task8] – Theory revision. Given a qualitative, causal physics model, the robot should be able to revise that model according to how well its predictions match the observed qualitative object behaviour. When qualitative predictions are incorrect, the system will identify where the gap is in the model, and generate hypotheses for actions that will fill in these gaps. (M37 – M48)

[Task9] – Representations of gaps in object knowledge and manipulation skills. Enabling all models of objects and grasps to also represent missing knowledge is a necessary prerequisite for reasoning about information-gathering actions and for representing beliefs about beliefs, and is therefore an ongoing task throughout the project. (M1 – M48)
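As a rough illustration of what it could mean for an object model to represent its own missing knowledge, the sketch below marks unknown properties explicitly and lists those that block a given task. The class `ObjectKnowledge`, its fields and the helper `missing_for_task` are hypothetical names chosen for this example. Treating `None` as "unknown" is a simplifying assumption, and the sketch does not capture uncertainty or beliefs about beliefs.

```python
from dataclasses import dataclass, fields
from typing import List, Optional, Tuple


@dataclass
class ObjectKnowledge:
    label: str
    # None stands for "not yet known" (an assumption of this sketch).
    appearance_model: Optional[str] = None
    current_room: Optional[str] = None
    grasp_pose: Optional[Tuple[float, float, float]] = None
    weight_kg: Optional[float] = None

    def gaps(self) -> List[str]:
        """Names of all properties whose value is still unknown."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


def missing_for_task(knowledge: ObjectKnowledge, required: List[str]) -> List[str]:
    """Subset of the required properties that are knowledge gaps."""
    known_gaps = set(knowledge.gaps())
    return [name for name in required if name in known_gaps]


# The robot knows which room the box is in, but nothing else yet.
box = ObjectKnowledge("cornflakes box", current_room="kitchen")
fetch_requirements = ["appearance_model", "current_room", "grasp_pose"]
to_fill = missing_for_task(box, fetch_requirements)
```

Here `to_fill` names the gaps that information-gathering actions would need to close before the fetch task can be completed, while `weight_kg` remains a gap that only curiosity-driven exploration would address.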

Partners

- University of Birmingham BHAM United Kingdom

- Deutsches Forschungszentrum für Künstliche Intelligenz GmbH DFKI Germany

- Kungliga Tekniska Högskolan KTH Sweden

- Univerza v Ljubljani UL Slovenia

- Albert-Ludwigs-Universität ALU-FR Germany