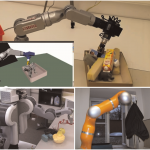

InDex

Robot In-hand Dexterous manipulation by extracting data from human manipulation of objects to improve robotic autonomy and dexterity

The InDex project aims to understand how humans perform in-hand object manipulation and to replicate the observed skilled movements with dexterous artificial hands, combining deep reinforcement learning and transfer learning to generalise in-hand skills across multiple objects and tasks. In addition, an abstraction and representation of prior knowledge will be fundamental for reproducing learned skills on different hardware. Learning will use data from multiple modalities, which will be collected, annotated, and assembled into a large dataset. The data and our methods will be shared with the wider research community to allow testing against benchmarks and reproduction of results.

The core objectives are: (i) to build a multi-modal artificial perception architecture that extracts data from human object manipulation; (ii) to create a multi-modal dataset of in-hand manipulation tasks such as regrasping, reorienting and fine repositioning; (iii) to develop an advanced object modelling and recognition system, including the characterisation of object affordances and grasping properties, in order to capture both explicit information and possible implicit object usages; (iv) to autonomously learn and precisely imitate human strategies in handling tasks; and (v) to build a bridge between observation and execution, allowing deployment that is independent of the robot.

FUNDING:

The project is funded by the Austrian Science Fund (FWF) and CHIST-ERA.