GRASP

Emergence of Cognitive Grasping through Emulation, Introspection, and Surprise

The aim of GRASP is to design a cognitive system capable of performing tasks in open-ended environments and of dealing with uncertainty and novel situations. The design of such a system must satisfy three requirements: (i) it has to rest on a solid theoretical basis and (ii) it has to be evaluated extensively on a suitable and measurable basis, so that (iii) it allows for self-understanding and self-extension.

We have decided to study the problem of object manipulation and grasping by providing a theoretical and measurable basis for system design that is valid for both human and artificial systems. We believe that this is of utmost importance for the design of artificial cognitive systems that are to be deployed in real environments and interact with humans and other artificial agents. Such systems need the ability to exploit innate knowledge and self-understanding to gradually develop cognitive capabilities. To demonstrate the feasibility of our approach, we will instantiate, implement and evaluate our theories on robot systems with different embodiments and levels of complexity. These systems will operate in real-world scenarios, with and without human intervention and tutoring.

GRASP will develop means for robotic systems to reason about graspable targets, to explore and investigate their physical properties, and finally to make artificial hands grasp any object. We will use theoretical, computational and experimental studies to model skilled sensorimotor behavior based on known principles governing grasping and manipulation tasks performed by humans. To this end, GRASP sets out to integrate a large body of findings from disciplines such as neuroscience, cognitive science, robotics, multi-modal perception and machine learning to achieve a core capability: grasping any object by building up relations between task setting, embodied hand actions, object attributes, and contextual knowledge.

Goals

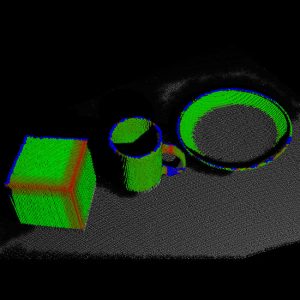

Develop computer vision methods that detect grasping points on arbitrary objects, so that any object can be grasped. By the end of the project we want to show that the robot can empty a basket filled with everyday objects, even if it has never seen some of the objects before. Hence it is necessary to develop the vision methods and to link percepts to motor commands via an ontology that represents the grasping knowledge, relating object properties such as shape, size, and orientation to hand grasp types and postures and to the task.

Our (TUW) tasks/goals in this project are:

- [Task1] – Acquiring (perceiving, formalising) knowledge through hand-environment interaction:

The objective is to combine expectations from previous grasping experiences with the percepts of the current grasping action. Hence we investigate a plethora of cues and features in order to extract the set of cues relevant to the grasping task. We will study edge structure features and their grouping into objects, surface reconstruction and tracking, figure/ground segmentation, shape from edges and surfaces, recognition/classification of objects, spatio-temporal and pose relations between hand and object, multimodal grounding, and uncertainties of geometric attributes leading to low-level surprise detection, as well as the integration or synthesis of these in the ontology, including the combination with prediction (TUM, TUW).

- [Task2] – Perceiving task relations and affordances: The objective is to exploit the set of features extracted in Task 4.1 to obtain a vocabulary of features relevant to the grasping of objects and to learn how these features relate to the potential grasping behaviours and types. These relations will form part of the grasping ontology. Furthermore, the goal is to obtain a hierarchical structure or abstraction of features, such that new objects can be related to this hierarchy. The approach sets out to obtain an asymptotic behaviour for new objects: early on, extensions are frequently necessary, while over the course of learning the system knows how to grasp more and more objects. Finally, the features will be used to propose potential actions (affordances) and to invoke the grasping cycle.

- [Task3] – Linking structure, affordance, action and task: The objective is to provide the necessary input to the grasping ontology, which holds the learned grasping experiences in a relational graph or database. It contains an abstraction formed over specific behaviours and sub-parts of reaching and grasping actions. It models relations and constraints to (1) the object and its properties such as size, shape and weight, to (2) perceived affordances (potentialities for actions) and grasping points, to (3) the task that is executed, e.g., grasping to pick up or to move a cup, and to (4) the relevant context or surroundings, e.g., obstacles to circumnavigate or surfaces on which to place the object. We will investigate how such a link can be established efficiently, how the plasticity of the link can be achieved to enable learning and multiple cross-references, and how this can form a hierarchy of behaviours and links that efficiently represents different grasp types and relations, exploiting the vocabulary to achieve extendibility to grasping new objects.
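To make the idea of the grasping ontology in Task3 more concrete, the following is a minimal sketch of how grasping experiences could be stored and queried. It is an illustration only, not the project's implementation: the class names `GraspExperience` and `GraspOntology`, the field names, and the nearest-size lookup are all assumptions chosen for brevity.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspExperience:
    """One learned grasping experience (hypothetical record layout)."""
    object_shape: str      # (1) object property, e.g. "cylinder"
    object_size_cm: float  # (1) object property
    affordance: str        # (2) perceived affordance, e.g. "graspable-side"
    task: str              # (3) task, e.g. "pick up"
    context: str           # (4) context, e.g. "free table"
    grasp_type: str        # hand grasp type that was used


class GraspOntology:
    """Toy relational store linking object, affordance, task and context."""

    def __init__(self):
        # Index experiences by (shape, task) for quick lookup.
        self._by_key = defaultdict(list)

    def add(self, experience):
        key = (experience.object_shape, experience.task)
        self._by_key[key].append(experience)

    def propose_grasp(self, shape, size_cm, task):
        """Return the grasp type of the stored experience closest in size,
        or None if no experience matches the shape and task."""
        candidates = self._by_key.get((shape, task), [])
        if not candidates:
            return None  # unknown object/task: the ontology needs extending
        best = min(candidates, key=lambda e: abs(e.object_size_cm - size_cm))
        return best.grasp_type
```

A query that returns `None` corresponds to the case where the ontology has to be extended with a new experience. A real system would replace the simple (shape, task) index with the hierarchical feature vocabulary described in Task2.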

Partners

- Kungliga Tekniska Högskolan, Stockholm, Sweden

- Universität Karlsruhe, Karlsruhe, Germany

- Technische Universität München, Munich, Germany

- Lappeenranta University of Technology, Lappeenranta, Finland

- Foundation for Research and Technology – Hellas, Greece

- Universitat Jaume I, Castellón, Spain

- Otto Bock GmbH (OB), Austria