BLORT

The Blocks World Robotic Vision Toolbox

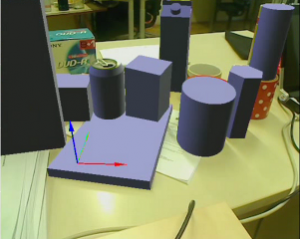

The vision and robotics communities have developed a large number of increasingly successful methods for tracking, recognising and on-line learning of objects, each with its particular strengths and weaknesses. A researcher aiming to give a robot the ability to handle objects will typically have to pick amongst these and engineer a system that works for her particular setting. The work presented in this paper aims to provide a toolbox that simplifies this task and allows handling of diverse scenarios, though it has its own limitations. The toolbox is aimed at robotics research, so we have in mind objects typically of interest in robotic manipulation scenarios, e.g. mugs, boxes and packaging of various sorts. We do not aim to cover articulated objects (such as walking humans), highly irregular objects (such as potted plants) or deformable objects (such as cables).

The system requires no specialised hardware and uses a single camera, so it can run on almost any robot. The toolbox integrates state-of-the-art methods for detecting and learning novel objects and for recognising and tracking learned models. Integration is currently done via our own modular robotics framework, but the libraries making up the modules can also be integrated separately into other projects.

Authors

Michael Zillich – zillich(at)acin.tuwien.ac.at

Thomas Mörwald – moerwald(at)acin.tuwien.ac.at

Johann Prankl – prankl(at)acin.tuwien.ac.at

Andreas Richtsfeld – ari(at)acin.tuwien.ac.at

Source Code

For installation, follow the README provided in the package.

Current Stable Release

Older Releases

Videos

BLORT – The Blocks World Robotic Vision Toolbox

BLORT.mpg (19 MB), BLORT.avi (13 MB)

Shows learning, recognition and tracking of objects using BLORT.

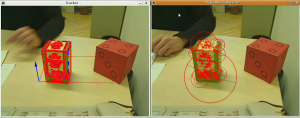

Detection and tracking

detect-track.mpg

Shows an object placed on a textured ground plane. The object is detected and subsequently tracked based on texture edges. Matching texture edges are shown in red, mismatches in blue.

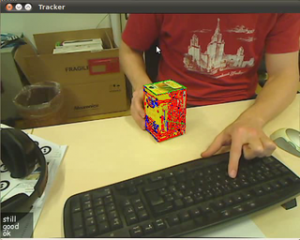

Learning and recognition phase

track-learn.ogv

Shows learning and recognition. In the first part of the video the object is placed on the table and tracked (left image), initially based on geometry edges alone. The tutor rotates the object, and the tracker maps texture onto the various surfaces and subsequently uses texture edges as well. Moreover, SIFT features are detected for a number of views and mapped onto the object surface (right image). In the second part of the video the SIFT-based recogniser locates the object at various locations on the table and initialises the tracker (the object is then shown in grey); in most cases the tracker is able to "snap" onto the object.

How to learn object textures

learn-textures.avi

Shows how to learn object textures, i.e. how observed image texture is mapped onto the object surface while tracking.

References

Mörwald, T., Prankl, J., Richtsfeld, A., Zillich, M. and Vincze, M. BLORT – The Blocks World Robotic Vision Toolbox. Best Practice in 3D Perception and Modeling for Mobile Manipulation (in conjunction with ICRA 2010). 2010. PDF

Zillich, M. and Vincze, M. Anytimeness Avoids Parameters in Detecting Closed Convex Polygons. The Sixth IEEE Computer Society Workshop on Perceptual Grouping in Computer Vision (POCV 2008). 2008. PDF

Richtsfeld, A. and Vincze, M. Basic Object Shape Detection and Tracking using Perceptual Organization. International Conference on Advanced Robotics (ICAR), pages 1–6. 2009. PDF

Mörwald, T., Zillich, M. and Vincze, M. Edge Tracking of Textured Objects with a Recursive Particle Filter. 19th International Conference on Computer Graphics and Vision (Graphicon), Moscow, pages 96–103. 2009. PDF

Richtsfeld, A., Mörwald, T., Zillich, M. and Vincze, M. Taking in Shape: Detection and Tracking of Basic 3D Shapes in a Robotics Context. Computer Vision Winter Workshop (CVWW), pages 91–98. 2010. PDF