TUW Object Instance Recognition Dataset

This website provides annotated RGB-D point cloud sequences from three datasets: Willow, Challenge and TUW (static and dynamic). While the former two were used for the ICRA Perception Challenge 2011, the TUW dataset provides more challenging scenarios: heavily cluttered indoor environments and partially or fully occluded objects, textured as well as untextured, some of which appear multiple times in a scene. In contrast to other perception challenges (e.g. Pascal VOC, ImageNet LSVRC), these datasets specifically target robot perception, where one has to not only identify objects but also estimate each object's 3D pose relative to the robot. Each scene is therefore annotated with the identity of the objects as well as their 6DOF poses. The model database for the Willow and Challenge datasets consists of 35 object models; the TUW database consists of 17. Each model is represented by RGB, depth and normal information.
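A 6DOF pose combines a 3D rotation and a 3D translation. The minimal sketch below shows how such a pose places a model's points into a scene. It assumes the pose is expressed as a 4x4 homogeneous transform from the model frame to the sensor frame; the actual annotation file format is not described on this page. The helper names `pose_from_rotation_z` and `transform_points` are hypothetical and only illustrate the arithmetic p_scene = R p_model + t.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]


def pose_from_rotation_z(theta: float, t: Point) -> List[List[float]]:
    """Hypothetical helper: build a 4x4 homogeneous pose that rotates
    about the z axis by theta (radians) and then translates by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c, -s, 0.0, t[0]],
        [s, c, 0.0, t[1]],
        [0.0, 0.0, 1.0, t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_points(pose: Matrix4, points: Sequence[Point]) -> List[Point]:
    """Apply a 4x4 pose to model-frame points: p_scene = R * p_model + t.
    Assumes the pose maps model coordinates into the sensor frame."""
    out: List[Point] = []
    for x, y, z in points:
        # Only the first three rows are needed; the last row is [0, 0, 0, 1].
        out.append(tuple(
            pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3]
            for i in range(3)
        ))
    return out


if __name__ == "__main__":
    # Rotate a model point 90 degrees about z and shift it 1 m along z.
    pose = pose_from_rotation_z(math.pi / 2, (0.0, 0.0, 1.0))
    print(transform_points(pose, [(1.0, 0.0, 0.0)]))
```

With a real annotation, the same operation would overlay the object model on the recorded point cloud, which is how a ground-truth pose can be checked visually.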

Furthermore, we provide annotations for the Willow and Challenge datasets.

TUW

This dataset is composed of 15 multi-view sequences of static indoor scenes totalling 163 RGB-D frames (plus 3 dynamic scenes with 61 views in total). The number of objects in the different sequences amounts to 162, resulting in 1911 object instances (some of them fully occluded in some frames).

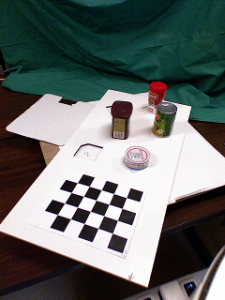

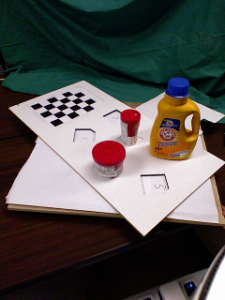

Examples


Willow and Challenge Dataset

The Willow dataset is composed of 24 multi-view sequences totalling 353 RGB-D frames. The number of objects in the different sequences amounts to 110, resulting in 1628 object instances (some of them totally occluded in some frames). The Challenge dataset is composed of 39 multi-view sequences totalling 176 RGB-D frames. The number of objects in the different sequences amounts to 97, resulting in 434 object instances.


[1] Aitor Aldoma, Thomas Fäulhammer, Markus Vincze, “Automation of Ground-Truth Annotation for Multi-View RGB-D Object Instance Recognition Datasets”, IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2014.

[2] Thomas Fäulhammer, Aitor Aldoma, Michael Zillich, Markus Vincze, “Temporal Integration of Feature Correspondences For Enhanced Recognition in Cluttered And Dynamic Environments”, IEEE Int. Conf. on Robotics and Automation (ICRA), 2015.

[3] Thomas Fäulhammer, Michael Zillich, Markus Vincze, “Multi-View Hypotheses Transfer for Enhanced Object Recognition in Clutter”, IAPR Conference on Machine Vision Applications (MVA), 2015.

Examples from the database