James

Our robot butler “James” is a mobile manipulator and consists of the following main parts:

- a mobile platform to navigate through indoor environments,

- a touch screen as the main user interface,

- a 7-DOF human-like robot arm, and

- a hand prosthesis to grasp objects.

Platform

James is based on the BlueBotics platform “Movement” (see our Movement project), which was developed to fulfill the project’s requirements. The platform has a payload of 150 kg and a maximum speed of 5 km/h. It is scalable and can therefore be sized to suit the different use cases demanded by the market; the robots@home project benefits from this versatility. Instead of a SICK LMS-200 laser range scanner, James navigates with an Embedded Stereo Vision System (EVS), which consists of two high-definition wide-angle cameras.
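Range from a stereo pair such as the EVS is typically recovered by triangulation. As a minimal worked sketch, assuming two rectified pinhole cameras with focal length f (pixels), baseline B (metres) and measured disparity d (pixels), the depth Z and its sensitivity to a disparity error Δd are as follows. The numbers (f = 700 px, B = 0.12 m, d = 42 px, Δd = 1 px) are illustrative assumptions, not EVS specifications.

```latex
Z = \frac{f\,B}{d}
\qquad
\Delta Z \approx \left|\frac{\partial Z}{\partial d}\right| \Delta d
        = \frac{f\,B}{d^{2}}\,\Delta d
        = \frac{Z^{2}}{f\,B}\,\Delta d

Z = \frac{700 \times 0.12}{42} = 2.0\ \mathrm{m}
\qquad
\Delta Z \approx \frac{2.0^{2}}{700 \times 0.12} \times 1 = \frac{4}{84} \approx 0.048\ \mathrm{m}
```

Because the error grows with Z², stereo depth estimates become noisier with distance much faster than the ranges of a laser scanner.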

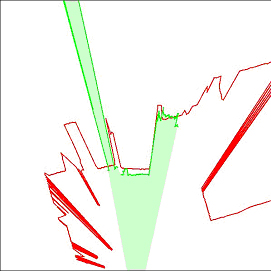

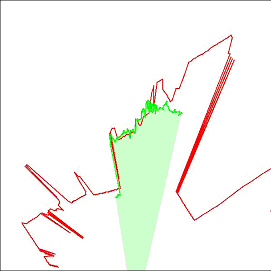

Figure 1 shows results of generating obstacle-avoidance data with the above-mentioned custom-built stereo system (two Unibrain Fire-i IEEE 1394 cameras and the SRI Stereo Engine from Videre Design Inc.). The red lines in subfigures (b) and (d) represent measurements taken with the SICK LMS 200 range finder, which serve as reference data. The green lines represent data obtained by post-processing the stereovision measurements. Within the overlap of the fields of view of the laser range finder and the stereovision system, both sensors perform comparably. Subfigure (b), however, also shows that stereovision produces erroneous measurements more readily than the laser range finder. The red markings in subfigures (a) and (c) indicate the features used to calculate the results depicted in subfigures (b) and (d).

Figure 1: Visualisation of data for obstacle avoidance derived from post-processing measurements taken with a (vertical) stereovision system.
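The post-processing behind Figure 1 is not described in detail here. One common way to turn stereo data into laser-like obstacle data is to keep the 3D points within a height band, bin them by bearing, and retain the nearest point per bin; the result can then be compared bin by bin with the laser scan wherever both sensors returned a range. The sketch below assumes that approach. The function names, height band, bin layout, 10 cm tolerance and example values are hypothetical choices, not values from the robots@home system.

```python
import math

def points_to_scan(points, angle_min, angle_max, n_bins, z_min=0.05, z_max=1.5):
    """Collapse 3D points (robot frame: x forward, y left, z up, metres) into a
    planar range scan by keeping the nearest point in each bearing bin."""
    ranges = [math.inf] * n_bins  # inf = no obstacle seen in this bin
    width = (angle_max - angle_min) / n_bins
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # drop floor points and points above the robot's height
        bearing = math.atan2(y, x)
        if not (angle_min <= bearing < angle_max):
            continue  # outside the virtual scanner's field of view
        i = min(int((bearing - angle_min) / width), n_bins - 1)
        ranges[i] = min(ranges[i], math.hypot(x, y))
    return ranges

def compare_scans(stereo, laser, tolerance=0.10):
    """Compare two scans bin by bin, using only bins where both sensors
    returned a range, i.e. the overlap of their fields of view.
    Returns (mean absolute difference, fraction of bins off by > tolerance),
    or None if the scans do not overlap."""
    diffs = [abs(s - l) for s, l in zip(stereo, laser)
             if math.isfinite(s) and math.isfinite(l)]
    if not diffs:
        return None
    mean_error = sum(diffs) / len(diffs)
    outlier_ratio = sum(d > tolerance for d in diffs) / len(diffs)
    return mean_error, outlier_ratio

# Example: four stereo points (the last lies on the floor and is filtered out)
# against a hypothetical four-bin laser scan covering -90°..+90°.
# Bins without stereo data are ignored in the comparison.
stereo_points = [(1.0, 0.0, 0.5), (2.0, 0.0, 0.5), (1.0, -0.5, 0.3), (0.5, 0.0, 0.0)]
stereo_scan = points_to_scan(stereo_points, -math.pi / 2, math.pi / 2, n_bins=4)
laser_scan = [0.9, 1.15, 1.3, 2.0]
print(compare_scans(stereo_scan, laser_scan))
```

The outlier ratio is a simple way to quantify the observation that stereovision yields wrong measurements more often than the laser, while the mean difference captures how comparable the two sensors are inside the overlap.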

User Interface

James has a PC with a touch panel mounted on top of his platform, intended to serve as an easy-to-use user interface. Through this interface, the user can give James information about his environment. From this information, James builds his own mental map of the new environment and can then navigate autonomously. When he is uncertain, James asks the user for clarification.
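How James stores what the user tells him is not specified here. As a minimal, hypothetical sketch of the "ask when uncertain" behaviour, assume the user labels map positions by name on the touch panel; a lookup then either yields a navigation goal or a question for the user when the name is unknown or ambiguous. The class `MentalMap`, its methods and the coordinates are illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class MentalMap:
    """Hypothetical store of places the user has taught via the touch panel."""
    # lower-cased place name -> list of (x, y) map positions, in metres
    places: dict = field(default_factory=dict)

    def teach(self, name, position):
        """Record a user-supplied label for a map position."""
        self.places.setdefault(name.lower(), []).append(position)

    def resolve(self, name):
        """Return ("goal", position) if the name is unambiguous, otherwise
        ("ask", question) so the robot can ask the user instead of guessing."""
        candidates = self.places.get(name.lower(), [])
        if len(candidates) == 1:
            return "goal", candidates[0]
        if not candidates:
            return "ask", f"I don't know '{name}' yet. Can you show me where it is?"
        return "ask", f"I know {len(candidates)} places called '{name}'. Which one do you mean?"

james_map = MentalMap()
james_map.teach("Kitchen", (4.2, 1.0))
james_map.teach("table", (1.0, 2.0))
james_map.teach("table", (4.5, 0.5))
print(james_map.resolve("kitchen"))
print(james_map.resolve("table"))
```

Returning a question rather than picking one of several candidates mirrors the behaviour described above: uncertainty is resolved by the user, not by a guess.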

Arm

Thanks to the platform’s high payload, James can be equipped with a lightweight 7-DOF robot arm (Amtec/Schunk). The kinematic structure of this arm resembles that of the human arm, with spherical joints at the shoulder and the wrist and a rotary joint at the elbow. To ensure safe arm motion, Amrose’s PathPlanning tool (AMPP) is used to compute collision-free paths. The path planner also takes the shape of the platform into account to avoid self-collision.
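The spherical-rotary-spherical structure gives 3 + 1 + 3 = 7 joints, one more than the six needed to place the hand at an arbitrary position and orientation, so the elbow can swing around the shoulder-wrist line without moving the hand. The hypothetical sketch below computes forward kinematics for such an arm and runs a naive sampled check of a straight joint-space motion against a box standing in for the platform. Link lengths, joint-axis conventions, the box dimensions and all function names are assumptions; this is not AMPP, whose algorithms are not described here.

```python
import math

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def apply(r, v):
    return [sum(r[i][k] * v[k] for k in range(3)) for i in range(3)]

def add(p, q):
    return [a + b for a, b in zip(p, q)]

# Hypothetical link lengths in metres (not the real Amtec/Schunk dimensions).
UPPER_ARM, FOREARM, HAND = 0.35, 0.30, 0.15
# Hypothetical platform box below the shoulder, which sits at the origin.
PLATFORM_MIN, PLATFORM_MAX = (-0.3, -0.3, -0.8), (0.3, 0.3, -0.05)

def forward_kinematics(q, shoulder=(0.0, 0.0, 0.0)):
    """Return shoulder, elbow, wrist and tool positions for joint angles q[0..6].
    Shoulder: z-y-z spherical joint (q0..q2); elbow: y rotation (q3);
    wrist: z-y-z spherical joint (q4..q6). At q = 0 the arm points straight up."""
    r_shoulder = matmul(matmul(rot_z(q[0]), rot_y(q[1])), rot_z(q[2]))
    r_elbow = matmul(r_shoulder, rot_y(q[3]))
    r_wrist = matmul(matmul(matmul(r_elbow, rot_z(q[4])), rot_y(q[5])), rot_z(q[6]))
    p0 = list(shoulder)
    p1 = add(p0, apply(r_shoulder, [0.0, 0.0, UPPER_ARM]))  # elbow
    p2 = add(p1, apply(r_elbow, [0.0, 0.0, FOREARM]))       # wrist
    p3 = add(p2, apply(r_wrist, [0.0, 0.0, HAND]))          # tool point
    return [p0, p1, p2, p3]

def inside_box(p, box_min, box_max):
    return all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))

def path_is_collision_free(q_start, q_goal, box_min, box_max, steps=50, samples_per_link=5):
    """Naively check a straight joint-space path: at each step, sample points
    along every link and reject the path if any of them lies inside the box."""
    for s in range(steps + 1):
        t = s / steps
        q = [a + t * (b - a) for a, b in zip(q_start, q_goal)]
        joints = forward_kinematics(q)
        for start_pt, end_pt in zip(joints, joints[1:]):
            for k in range(samples_per_link + 1):
                u = k / samples_per_link
                point = [a + u * (b - a) for a, b in zip(start_pt, end_pt)]
                if inside_box(point, box_min, box_max):
                    return False
    return True

q_up = [0.0] * 7
print(path_is_collision_free(q_up, [0, math.pi / 2, 0, 0, 0, 0, 0], PLATFORM_MIN, PLATFORM_MAX))
print(path_is_collision_free(q_up, [0, math.pi, 0, 0, 0, 0, 0], PLATFORM_MIN, PLATFORM_MAX))
```

Swinging the arm forward to horizontal stays clear of the box, while swinging it straight down drives the elbow into the platform. A real planner would also model link thickness and search for an alternative path instead of only rejecting one.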

Hand

For James’s hand we use a modified prosthetic hand from Otto Bock HealthCare GmbH, which enables James to grasp objects. The hand is simple to control, having only one degree of freedom, and has tactile sensors in three fingertips that detect object slippage so that the grip force can be increased automatically.
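The slip response can be pictured as one step of a simple control loop. The sketch below is hypothetical: it assumes that slippage shows up as a rapid change in a normalised fingertip signal between two control steps, and the threshold, force increment and force limit are illustrative values, not Otto Bock specifications.

```python
# Hypothetical tuning values (normalised units), not Otto Bock specifications.
SLIP_RATE_THRESHOLD = 0.2  # change in a fingertip signal per step treated as slip
FORCE_STEP = 0.1           # grip-force increase per detected slip
MAX_FORCE = 1.0            # saturation limit of the single-DOF hand

def update_grip(force, prev_readings, readings):
    """One control step: if any of the fingertip sensors changes faster than
    the threshold, treat it as slippage and tighten the grip up to MAX_FORCE.
    Returns the new force command and whether slip was detected."""
    slipping = any(abs(cur - prev) > SLIP_RATE_THRESHOLD
                   for prev, cur in zip(prev_readings, readings))
    if slipping:
        force = min(force + FORCE_STEP, MAX_FORCE)
    return force, slipping

# The middle fingertip's signal changes sharply, so the grip is tightened.
print(update_grip(0.5, [0.8, 0.8, 0.8], [0.8, 0.5, 0.8]))
```

Because the hand has a single degree of freedom, the controller only needs one scalar command; the three fingertip sensors merely decide when to raise it.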

Research Project

The goal of robots@home is to provide an open mobile platform with an embedded perception system that supplies multi-modal sensor data for learning and mapping rooms and for classifying the main items of furniture. A further goal is a safe and robust navigation method that finally makes the case for using the platform in homes everywhere. We use James to develop these capabilities. One test took place at IKEA.

The EU projects GRASP and CogX study the coordination of sensing and acting abilities for learning to grasp any object.

GRASP is about developing means for robotic systems to reason about graspable targets, to explore and investigate their physical properties and finally to make artificial hands grasp any object.

By developing mechanisms that can generate a spectrum of behaviors, from purely task-driven information gathering to purely curiosity-driven learning, CogX will enable James to handle situations in open-ended, challenging environments and to cope with uncertain perception and changes in the scene.

James for robots@home