Object Change Detection Dataset of Indoor Environments

The ability to detect new, moved, or missing objects in large environments is key to many robot tasks, such as surveillance, tidying up, or maintaining order in homes or workplaces. What these tasks have in common is that the robot operates in the same environment every day. Revisiting a particular environment therefore enables a robot to use domain knowledge and to exploit its memory of previous visits.

By storing a reference map of the environment, a robot can check for scene consistency and thereby detect changes at the object level. A household robot, for example, uses the cleaned-up state of the environment as its reference map to discover objects it should tidy up. It is interested only in new objects, not in objects that have a permanent place, such as a chair, a lamp, or a computer keyboard, which may move slightly.

Fig. 1. A household robot tidying up a room. It compares a previously acquired reference map with the current state of the environment. Although the chair and other permanent objects moved slightly (colored green), only the mug (colored pink) should be detected as novel and therefore tidied up.
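To make the idea of comparing an observation against a reference map concrete, here is a minimal, purely geometric baseline. It is not the authors' method. It flags every observation point that has no reference point within a distance tolerance. The function name `candidate_new_points` and the 5 cm tolerance are hypothetical choices for illustration. The tolerance absorbs small displacements of permanent objects, but a chair moved beyond it would still be flagged. This is one reason a purely geometric comparison is not enough. According to its title, the accompanying paper combines global semantic information with local geometric verification.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def candidate_new_points(reference: Sequence[Point],
                         observation: Sequence[Point],
                         tolerance: float = 0.05) -> List[int]:
    """Return indices of observation points with no reference point within `tolerance`.

    Hypothetical baseline, not the method from the paper. Units are assumed to be
    meters. Uses brute-force nearest-neighbour search, which is fine for toy inputs
    but far too slow for full room reconstructions (use a k-d tree there).
    """
    flagged = []
    for i, p in enumerate(observation):
        # Distance from p to its closest point in the reference map.
        nearest = min((math.dist(p, q) for q in reference), default=math.inf)
        if nearest > tolerance:
            flagged.append(i)
    return flagged


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
observation = [(0.01, 0.0, 0.0), (1.0, 0.02, 0.0), (0.5, 0.5, 0.3)]
print(candidate_new_points(reference, observation))  # [2]
```

The first two observation points are slightly displaced copies of reference points and stay below the tolerance. Only the third point, which has no nearby reference point, is flagged.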

Dataset

The dataset was recorded with an Asus Xtion PRO Live camera mounted on the HSR robot. We provide reconstructions created with Voxblox [1] of five different environments, ranging from partially observed rooms (office, kitchen, living room) to complete rooms of different sizes (small and large). For each environment, there is a reference reconstruction plus five or six additional observations. In each observation, between 3 and 18 objects from the YCB Object and Model Set (YCB) [2] were newly introduced. In addition, furniture and decorations were occasionally moved slightly. The YCB objects serve as the ground truth. For each observation, we provide point-wise annotations of the reconstruction.

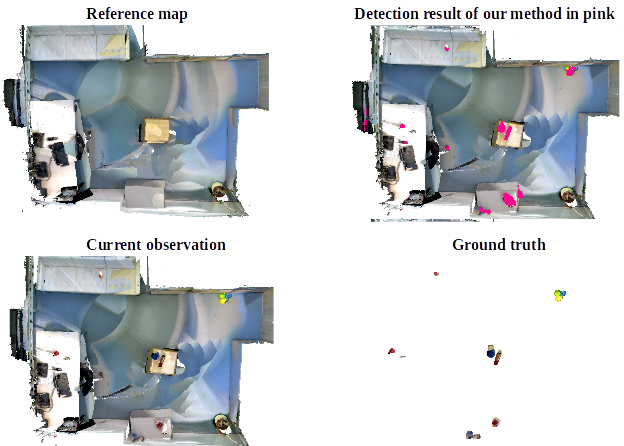

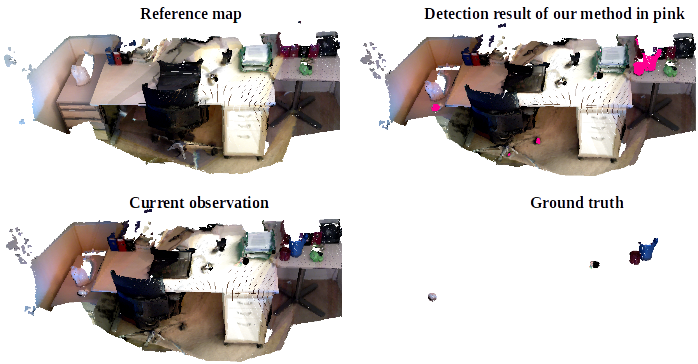

Figure 1 and Figure 2 show example scenes of the small room and the partial office room, respectively.

Figure 1: Example scene of the small room.

Figure 2: Example scene of the partial office room.

The dataset provided here is a cleaned-up version of the dataset we used to generate the results reported in the IROS paper. We removed all duplicate points introduced by Voxblox from the reconstructions and annotations.
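Removing duplicate points from a reconstruction also shifts the indices that the annotations refer to, so the two must be updated together. The sketch below shows one way to do this. It assumes duplicates are exact coordinate matches, and the function name `deduplicate` is hypothetical. The procedure actually used to clean this dataset is not described beyond the sentence above.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def deduplicate(points: Sequence[Point],
                gt_indices: Sequence[int]) -> Tuple[List[Point], List[int]]:
    """Drop exact duplicate points and remap ground-truth indices to the new cloud.

    Hypothetical helper; assumes duplicates share identical coordinates.
    """
    first_index: Dict[Point, int] = {}  # point -> its index in the deduplicated cloud
    old_to_new: List[int] = []          # original index -> deduplicated index
    unique: List[Point] = []
    for p in points:
        if p not in first_index:
            first_index[p] = len(unique)
            unique.append(p)
        old_to_new.append(first_index[p])
    # Several original indices may collapse onto one new index; keep each once, sorted.
    new_gt = sorted({old_to_new[i] for i in gt_indices})
    return unique, new_gt


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(deduplicate(cloud, [2, 3]))
# ([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)], [0, 2])
```

Keeping the first occurrence of each point preserves the original point order, so the remapped annotation indices remain easy to verify.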

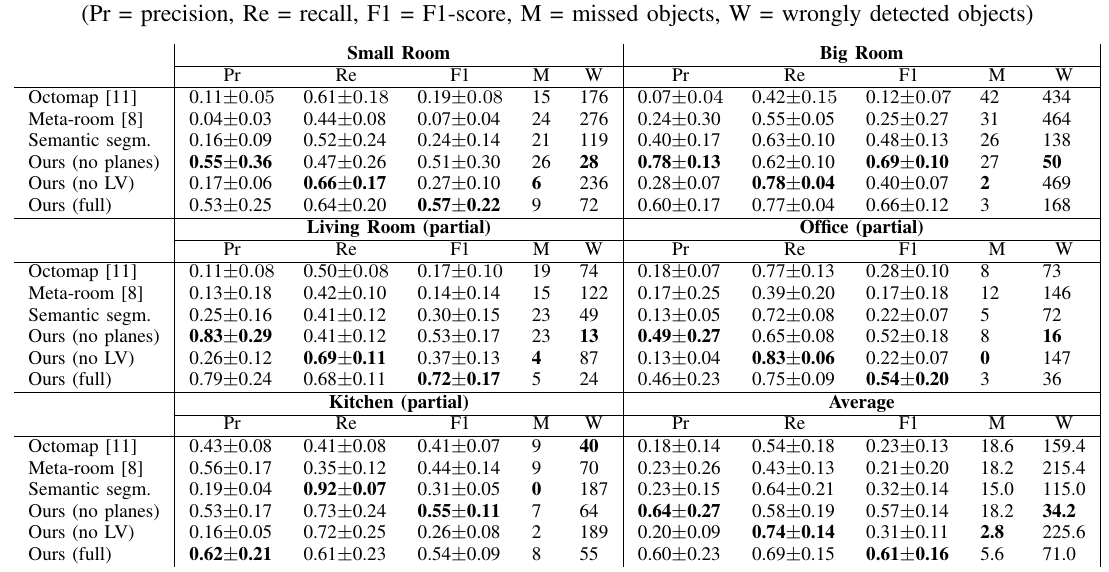

The table reports the performance of our method on the cleaned-up dataset.
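Because the ground truth is given as point indices, a prediction expressed as point indices can be scored directly against it. The sketch below computes point-level precision, recall, and F1. This is an assumed illustration only: the metrics in the table above may be defined differently (for example, at the object level). The function name `point_metrics` is hypothetical.

```python
from typing import Dict, Iterable


def point_metrics(predicted: Iterable[int], ground_truth: Iterable[int]) -> Dict[str, float]:
    """Point-level precision, recall and F1 between predicted and annotated indices.

    Hypothetical evaluation; not necessarily the metric used in the paper.
    """
    pred, gt = set(predicted), set(ground_truth)
    tp = len(pred & gt)  # points correctly flagged as belonging to new objects
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gt) if gt else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(point_metrics([1, 2, 3, 4], [3, 4, 5, 6]))
# {'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```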

Dataset Structure

Room
- scene1.pcd (the reference)
- scene2.pcd
- scene3.pcd
- ...
- Annotations (each file contains the point indices of the reconstruction that correspond to the ground truth)
  - scene2_GT.anno
  - scene3_GT.anno
  - ...
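Since each annotation file lists point indices into the matching reconstruction, it can be turned into a per-point ground-truth mask. The on-disk layout of the `.anno` files is not documented here. The sketch below assumes they contain integer indices separated by whitespace (e.g. one per line), so check the actual files before relying on it. The function name `load_gt_mask` is hypothetical. `num_points` would be the number of points in the corresponding sceneN.pcd.

```python
from pathlib import Path
from typing import List


def load_gt_mask(anno_path: str, num_points: int) -> List[bool]:
    """Return a mask that is True for points annotated as ground truth (YCB objects).

    ASSUMPTION: the .anno file stores whitespace-separated integer point indices.
    """
    mask = [False] * num_points
    for token in Path(anno_path).read_text().split():
        idx = int(token)
        # Guard against annotations that do not match the reconstruction.
        if not 0 <= idx < num_points:
            raise ValueError(f"index {idx} out of range for {num_points} points")
        mask[idx] = True
    return mask
```

For example, `load_gt_mask("Room/Annotations/scene2_GT.anno", n)` would pair with `Room/scene2.pcd`, where `n` is that cloud's point count. Raising on out-of-range indices makes a mismatched annotation/reconstruction pair fail loudly instead of silently.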

Download

If you are interested in the rosbag files (~300 GB), please contact the author so that we can find a way to share them. Each rosbag contains the tf tree, the RGB and depth streams, and the camera intrinsics.

Research paper

If you find our dataset useful, please cite the following paper:

@inproceedings{langer_dataset,
  author = {Langer, Edith and Patten, Timothy and Vincze, Markus},
  title = {Robust and Efficient Object Change Detection by Combining Global Semantic Information and Local Geometric Verification},
  booktitle = {IEEE International Conference on Intelligent Robots and Systems (IROS)},
  year = {2020},
}

Contact & credits

For any questions or issues with the dataset, feel free to contact the author:

- Edith Langer – email: langer@acin.tuwien.ac.at

Other credits:

- Christian Eder: ground truth annotation

- Kevin Wolfram: ground truth annotation

References

[1] H. Oleynikova, Z. Taylor, M. Fehr, R. Siegwart, and J. Nieto, "Voxblox: Incremental 3D Euclidean Signed Distance Fields for On-Board MAV Planning," in Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), 2017, pp. 1366–1373.

[2] B. Calli, A. Singh, J. Bruce, A. Walsman, K. Konolige, S. Srinivasa, P. Abbeel, and A. M. Dollar, "Yale-CMU-Berkeley dataset for robotic manipulation research," The International Journal of Robotics Research, vol. 36, no. 3, pp. 261–268, April 2017.