Synthetic-to-real RGBD Datasets

The large amount of annotated data required to train CNNs can be very costly and represents one of the main bottlenecks for their deployments in robotics. An attractive workaround that requires no manual annotation consists in generating a large synthetic training set by rendering 3D object models with computer graphics software, such as Blender [1]. However, the difference between the synthetic (source) training data and the real (target) test data severely undermines the recognition performance of the network.

Unsupervised Domain Adaptation (DA) is a field of research that accounts for the difference between source and target data by considering them as drawn from two different marginal distributions. DA approaches provide predictions on a set of target samples using only annotated source samples, with the unlabeled target samples available transductively. In order to encourage the research in RGB-D DA, we present a synthetic counterpart to two popular object datasets, RGB-D Object Dataset (ROD) [2] and HomeBrewedDB (HB) [3], that we call synROD and synHB, respectively.

Why not using existing datasets?

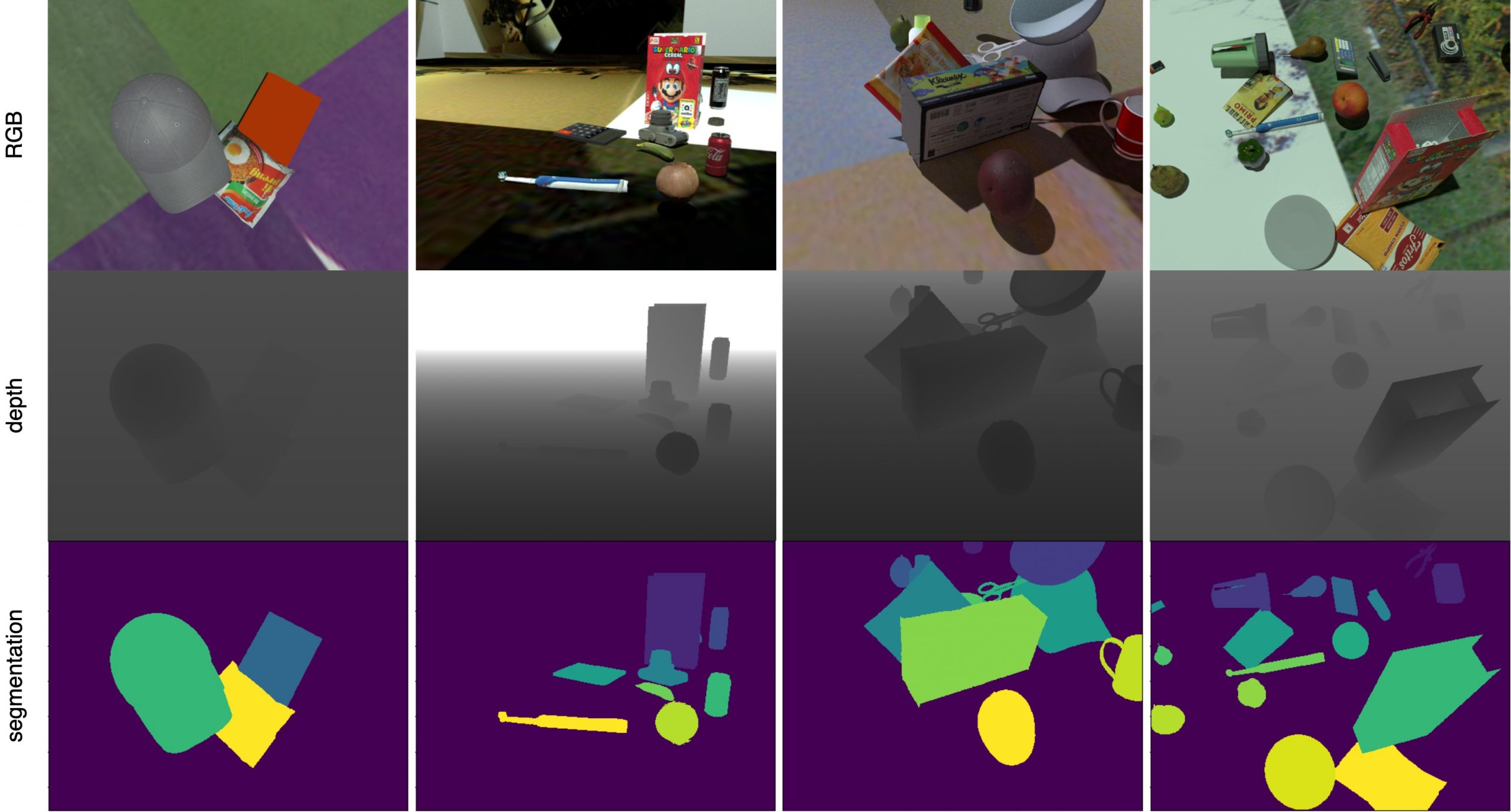

Fig.2: Examples of rendered scenes from synROD with increasing level of clutter from left to right. For each, we showcase the RGB, raw depth and segmentation mask image.

RGB-D DA has not been explored in the literature yet, so there are no standard benchmark datasets to evaluate methods developed for this purpose. The main challenge of defining a dataset to evaluate DA methods is to identify two distinct sets of data that exhibit the same annotated classes but have been collected in different conditions. In particular, we are interested in the synthetic-to-real domain shift, where the source domain presents RGB-D synthetic data, while the target domain presents RGB-D real data. Existing 3D object datasets, such as ModelNet [4] and ShapeNet [5], do not have a corresponding real dataset that shares the same classes. In addition, the lack of texture for some models makes them unusable for our purpose where we are interested in both the shape (depth) and the texture (color) of the object. Other datasets, such as LineMOD [6], are not very interesting for classification purposes since they only present a few object instances, all with very discernible appearance.

synROD

Since its release in 2011, ROD has become the main reference dataset for RGB-D object recognition in the robotics community. It contains 41,877 RGB-D images of 300 objects commonly found in house and office environments grouped in 51 categories. Each object is recorded on a turn-table with the RGB-D camera placed at approximately one-meter distance at 30°, 45° and 60° angle above the horizon. As mentioned in section~\ref{sec:dataset}, synROD is a synthetic dataset created using object models from the same categories as ROD collected from publiclz available 3D model repositories and rendered in Blender (see details below). To make the two datasets comparable, we randomly select and extract approximately 40,000 objects crops from synROD to match the dimensions of ROD. For the purpose of evaluating RGB-D DA methods, we can consider synROD as the synthetic source dataset and ROD as the real target dataset.

Selecting 3D Object Models

We query the objects from the free catalogs of public 3D model repositories, such as 3D Warehouse and Sketchfab, and only keep models that present texture information to be able to render the RGB modality in addition to the depth. All models are processed to harmonize the scale and canonical pose prior to the rendering stage. The final result of the selection stage is a set of 303 textured 3D models from the 51 object categories of ROD, for an average of about 6 models per category.

Rendering 2.5D scenes

We render 2.5D scenes using a ray-tracing engine in Blender to simulate photorealistic lighting. Each scene consists of a rendered view of a randomly selected subset of the models placed on a 1.2 x 1.2 meter virtual plane. The poses of the camera and the light source are sampled from an upper hemisphere of the plane with varying radius. To obtain natural and realistic object poses, each model is dropped on the virtual plane using a physics simulator. The number of objects in each scene varies from five to 20 to create different levels of clutter. To ensure a balanced dataset, we condition the selection of the models to insert in every scene to the number of past appearances. The background of the virtual space containing the objects is randomized by using images from the MS-COCO dataset [7]. We rendered approximately 30,000 RGB-D scenes with semantic annotation at pixel level (see figure 3).

synHB

HB is a dataset used for 6D pose estimation that features 17 toy, 8 household and 8 industry-relevant objects, for a total of 33 instances. HB provides high-quality object models reconstructed using a 3D scanner and 13 validation sequences. Each sequence contains three to eight objects on a large turntable and is recorded using two RGB-D cameras at 30° and 45° angle above the horizon. To re-purpose this dataset for the instance recognition problem, we extract the object crops from all the validation sequences, for a total of 22,935 RGB-D samples, and we refer to it as realHB. We create a synthetic version of this dataset by rendering the reconstructed object models using the same procedure used for synROD, and we refer to it as synHB. In order to make the two datasets comparable, we randomly select and extract about 25,000 objects crops from synHB to match the dimensions of realHB. For the purpose of evaluating RGB-D DA methods, we can consider synHB as the synthetic source dataset and realHB as the real target dataset.

Downloads

Research paper

If you found our dataset useful, please cite the following paper:

@article{loghmani2020unsupervised,

title={Unsupervised Domain Adaptation through Inter-modal Rotation for RGB-D Object Recognition},

author={Loghmani, Mohammad Reza and Robbiano, Luca and Planamente, Mirco and Park, Kiru and Caputo, Barbara and Vincze, Markus},

journal={arXiv preprint arXiv:2004.10016},

year={2020}}

Contact & credits

For any questions or issues feel free to contact us:

- Mohammad Reza Loghmani – email: loghmani@acin.tuwien.ac.at

- KiRu Park: – email: park@acin.tuwien.ac.at

References

[1] Blender,” http://www.blender.org, accessed: 2020-01-30.

[2] K. Lai, L. Bo, and D. Fox, “Unsupervised feature learning for 3Dscene labeling”, in ICRA, 2014, pp. 3050–3057.

[3] R. Kaskman, S. Zakharov, I. Shugurov, and S. Ilic, “Homebreweddb: RGB-D dataset for 6d pose estimation of 3d objects”, in ICCV Workshops, 2019

[4] Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao,“3D shapenets: A deep representation for volumetric shapes”, in CVPR, 2015, pp. 1912–1920.

[5] L. Yi, L. Shao, M. Savva, H. Huang, Y. Zhou, Q. Wang, B. Graham,M. Engelcke, R. Klokov, V. Lempitskyet al., “Large-scale 3D shape reconstruction and segmentation from shapenet core55”, arXiv preprint arXiv:1710.06104, 2017.

[6] S. Hinterstoisser, V. Lepetit, S. Ilic, S. Holzer, G. Bradski, K. Konolige, and N. Navab. “Model based training, detection and pose estimation of texture-less 3d objects in heavily cluttered scenes.” in ACCV, 2012, pp. 548-562.

[7] T.-Y. Lin, M. Maire S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects incontext,” in ECCV, 2014, pp. 740–755.